Can AI Perform as Well as a Lawyer? Lessons from Our First 4 Months in Market


We've all seen the headlines: AI aces the bar exam, AI hallucinates in court documents, AI competes in legal moots. But beneath the sensational stories lies a more pressing question for the legal industry: Can AI actually operate in commercial settings to the standard we would expect of a practicing lawyer?

That's exactly what we set out to discover at Veraty.ai.

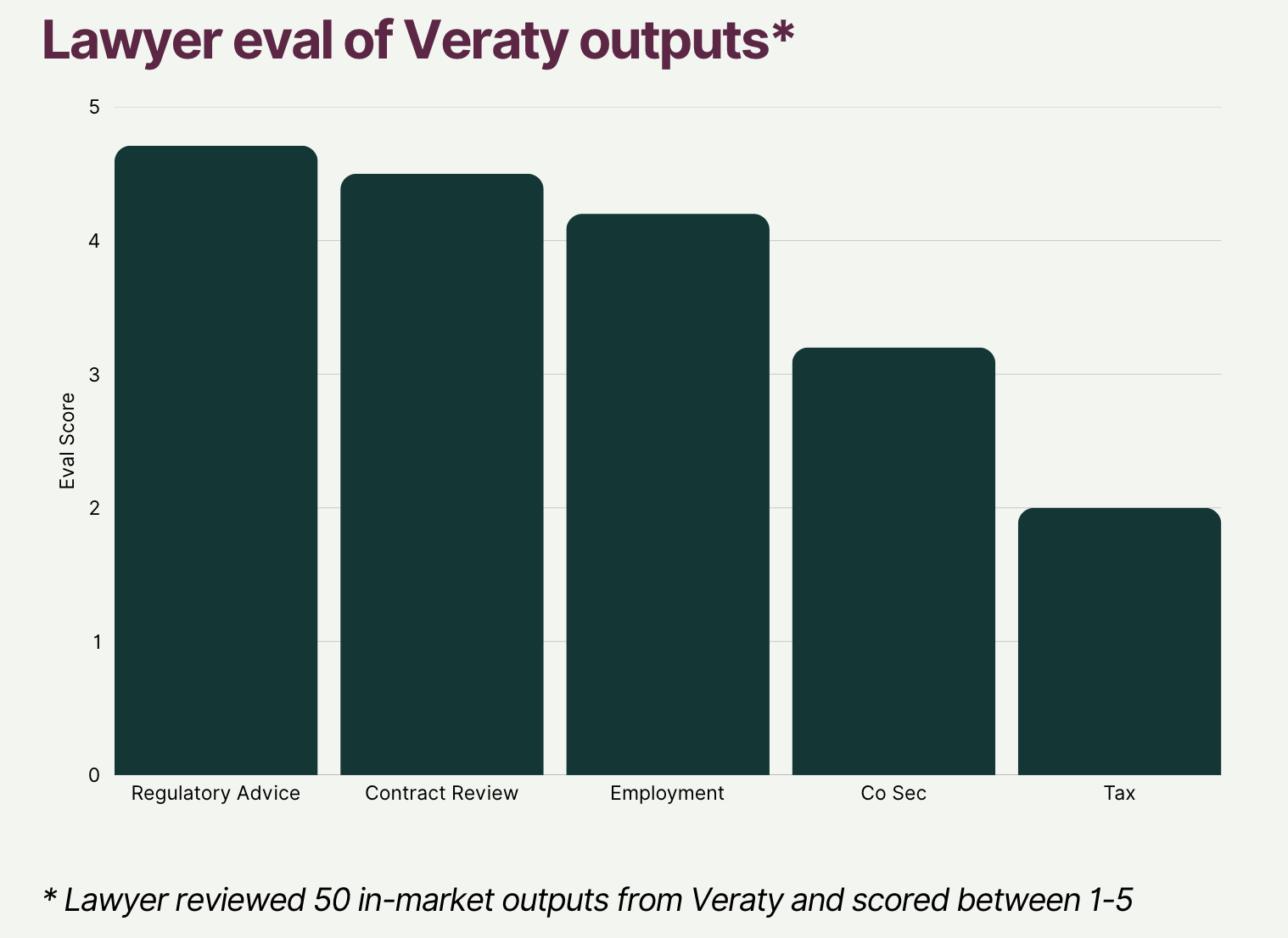

After four months of deploying our AI in-house counsel, Veraty, we partnered with the expert legal team at Zed Law to conduct a comprehensive assessment of its performance.

The results? Both encouraging and illuminating—offering crucial insights into where legal AI stands today and what the future holds.

The Great Legal AI Experiment: Our Methodology

Rather than relying on theoretical benchmarks or isolated test cases, we wanted to evaluate AI performance in the real world of commercial legal practice. Here's how we approached this challenge:

Our Testing Framework

We analyzed over 50 responses that Veraty provided to actual users during a concentrated 1-2 month period. Each response was meticulously categorized across two dimensions:

Subject Matter:

- Regulatory compliance

- Employment law

- Contract management

- Company secretarial (CoSec) matters

- Tax law

Task:

- Advice (offering legal advice for specific scenarios)

- Review (analyzing existing legal documents)

- Draft (creating new legal content)
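The two-dimensional categorization above can be represented as a simple tagged record. The following is a minimal sketch, not Veraty's actual tooling: the `Subject` and `Task` enums mirror the categories listed above, while `ReviewedResponse`, `coverage`, and the sample records are hypothetical names and placeholder data introduced only for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Subject(Enum):
    REGULATORY = "Regulatory compliance"
    EMPLOYMENT = "Employment law"
    CONTRACT = "Contract management"
    COSEC = "Company secretarial (CoSec) matters"
    TAX = "Tax law"


class Task(Enum):
    ADVICE = "Advice"
    REVIEW = "Review"
    DRAFT = "Draft"


@dataclass(frozen=True)
class ReviewedResponse:
    """One AI response tagged on both dimensions (hypothetical record type)."""
    response_id: str
    subject: Subject
    task: Task


def coverage(responses):
    """Count how many responses fall into each (subject, task) cell."""
    return Counter((r.subject, r.task) for r in responses)


# Placeholder records for illustration only; not data from the assessment.
sample = [
    ReviewedResponse("r1", Subject.REGULATORY, Task.ADVICE),
    ReviewedResponse("r2", Subject.CONTRACT, Task.REVIEW),
    ReviewedResponse("r3", Subject.CONTRACT, Task.REVIEW),
]
cells = coverage(sample)
```

Counting per cell like this makes it easy to see which subject and task combinations are thinly sampled before drawing conclusions from them.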

Our Scoring System

We employed a straightforward 1-5 rating scale that any legal professional would recognize:

- 5: No observable errors or omissions (lawyer-quality work)

- 4: 1 minor observable error or omission

- 3: 1 to 3 minor observable errors or omissions

- 2: 1 major observable error or omission (or 3 to 5 minor errors)

- 1: More than 1 major observable error or omission (or 5+ minor errors)

This scoring system allowed us to measure not just accuracy, but the practical usability of AI-generated legal work in commercial settings.
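The rubric can be expressed as a small function. This is a minimal sketch rather than the reviewers' actual procedure: `score_response` is a hypothetical name, and because the bands as written overlap at their boundaries (1 minor error fits both 4 and 3, 3 minor errors fit both 3 and 2, and 5 fit both 2 and 1), the sketch assumes the higher score applies in each overlap. It also assumes that a response with both major and minor errors receives the lower of the two applicable scores, which the rubric does not specify.

```python
def score_response(major: int, minor: int) -> int:
    """Map error counts to the 1-5 rubric (assumption: overlaps resolve upward)."""
    if major < 0 or minor < 0:
        raise ValueError("error counts must be non-negative")

    # Score implied by minor errors or omissions alone.
    if minor == 0:
        minor_score = 5
    elif minor == 1:
        minor_score = 4
    elif minor <= 3:
        minor_score = 3
    elif minor <= 5:
        minor_score = 2
    else:
        minor_score = 1

    # Score implied by major errors or omissions alone.
    if major == 0:
        major_score = 5
    elif major == 1:
        major_score = 2
    else:
        major_score = 1

    # Assumption: when both kinds of error are present, the worse score wins.
    return min(minor_score, major_score)
```

Under these assumptions, `score_response(major=0, minor=2)` returns 3 and `score_response(major=1, minor=0)` returns 2.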

The Results: Where AI Shines and Where It Struggles

AI's Standout Performance Areas

Our analysis revealed that Veraty performed exceptionally well in three critical areas:

1. Regulatory Advice

The AI demonstrated impressive accuracy in answering regulatory compliance questions, likely due to the structured nature of regulatory frameworks and the abundance of high-quality public information in this domain.

2. Employment Q&A and Drafting

Employment law queries and document drafting showed consistently strong performance, suggesting that AI can handle routine HR legal matters with minimal human oversight.

3. Contract Review

Perhaps most significantly for commercial practice, our AI excelled at contract analysis and review—a time-intensive task that forms the backbone of much legal work.

Areas Requiring Human Intervention

However, the results also highlighted clear limitations. Veraty needed human intervention in:

- Company Secretarial (CoSec) matters: jurisdiction-specific forms, filings, and constitution-specific procedural requirements.

- Tax law questions and drafting: particularly matters involving a complex interplay between constantly evolving tax legislation in different jurisdictions, case law precedents and highly fact-specific circumstances.

- Drafting complex documents from scratch: particularly contracts that lack a large body of publicly available precedents or best-practice documents, or contracts that require bespoke amendments.

These areas typically involve nuanced judgment calls, intricate regulatory requirements, and the need for creative legal solutions that current AI struggles to provide.

Three Critical Learnings That Will Shape Legal AI's Future

1. Human Lawyers Still Have Broader Competency than AI

Our findings confirm what many legal professionals suspect: AI is not yet capable of matching the broad competency of human lawyers. While AI performed exceptionally well in some areas, the results suggest that, for the foreseeable future, we'll either need human-in-the-loop approaches for several topics or must deliberately narrow the scope of what we ask AI to handle.

This isn't necessarily a limitation—it's a realistic assessment that can guide practical implementation strategies.

2. The Quality Equation: Garbage In, Garbage Out

A fundamental principle from data science proved crucial in our legal AI context: outputs are only as good as the inputs. This manifested in two key ways:

Data Quality Challenges: Areas with noisy or limited high-quality public information required significantly more human intervention. Legal domains with sparse, inconsistent, or low-quality training data simply cannot produce reliable AI outputs.

Context is King: The AI needed comprehensive context to deliver lawyer-quality answers. Vague questions or insufficient background information inevitably led to subpar responses.

3. The Path Forward is Clear (and Promising)

Despite the limitations, our results in regulatory Q&A, employment law, and contract review demonstrate that high-quality legal AI is not just feasible—it's already emerging. The strong performance in these areas suggests we're not decades away from practical legal AI, but rather 2-3 years.

Our Next Steps: Building Better Legal AI

Based on these insights, we're implementing three strategic improvements:

1. Strategic Human-AI Collaboration

We're implementing lawyers-in-the-loop processes for all areas where human intervention proved necessary. Rather than viewing this as a failure, we see it as a practical hybrid approach that leverages the strengths of both AI and human expertise.

2. Enhanced Input Quality

While we already maintain a template base of 100+ legal documents for contract drafting, we're rethinking how we use these inputs to generate stronger, more reliable outputs. We're also redesigning some of our systems to collect better inputs before providing outputs.

3. Expanded Evaluation Systems

We're significantly broadening our evaluation processes beyond our current systems, implementing more comprehensive quality assurance measures and creating greater visibility into AI performance across all legal domains.

The Bigger Picture: What This Means for Legal Practice

Our four-month experiment offers a realistic snapshot of where legal AI stands today. The technology shows remarkable promise in specific, well-defined areas while revealing clear limitations in others. For legal professionals, this suggests a future of strategic AI adoption rather than wholesale replacement.

The teams that will thrive are those that deploy AI thoughtfully to meet their legal needs while keeping up with the technology's rapid advancement, using it to improve efficiency in appropriate areas while maintaining human oversight for complex, nuanced work.

Want to learn more about what we're building? Check out Veraty.ai. If you know of innovative ways to better assess the quality of legal AI models in commercial settings, we'd love to hear from you.

What's your experience with legal AI? Are you seeing similar patterns in your business?
